AWS and EBS Instance Selection: Best Practices

Purpose

The following information will help you assess your performance requirements, then select and configure the correct instance and back-end storage to meet your business requirements.

Symptoms

Prior to deploying our SoftNAS® product, it is important to consider the performance requirements of the application. When evaluating these requirements, look at the following metrics, which help in selecting the AWS instance type and size, along with the back-end storage required to service the application:

- Data Read/Write ratio

- Input/Output Operations Per Second (IOPS) profile

- Throughput requirements

Resolution

There are several aspects to consider when planning your implementation:

Performance Requirement Assessment

Read/Write Ratio and IOPS Profile

Start the assessment by identifying the application’s read/write ratio.

There are a number of typical profiles that may be of use in this assessment.

- User and department file sharing usually has an R/W ratio ranging from 70/30 to 80/20. This means that 70-80% of operations are reads, while the remaining 20-30% are writes.

- Web content delivery typically has an even higher read ratio of 90/10.

- Database profiles vary dramatically depending on the application. Some are read-heavy, while others have write-heavy workloads. Each read or write activity is counted as an I/O operation. AWS sets the maximum I/O size at 256KB, so larger blocks are handled as multiple I/O operations.

The 256KB maximum I/O size applies to all EBS storage types. It is important to understand the specific R/W ratio and IOPS Profile requirements prior to deploying the SoftNAS® instance. By properly characterizing the application requirements, you’ll be able to effectively select the optimal instance and correct EBS storage type and configuration.
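To see how the R/W ratio and the 256KB rule combine into an IOPS profile, the arithmetic can be sketched as below. This is a minimal illustration, assuming every request in the workload is the same size; the 2,000 requests/s workload is a hypothetical figure, and the function names are illustrative only.

```python
import math

MAX_IO_KB = 256  # per the text: I/O larger than 256KB counts as multiple operations


def ios_per_request(request_kb: int) -> int:
    """Number of I/O operations a single request of request_kb KB is counted as."""
    if request_kb <= 0:
        raise ValueError("request size must be positive")
    return math.ceil(request_kb / MAX_IO_KB)


def iops_profile(requests_per_sec: int, read_pct: int, request_kb: int) -> tuple[int, int]:
    """Return (read IOPS, write IOPS) for a workload of equally sized requests.

    read_pct is the read share of the R/W ratio, e.g. 70 for a 70/30 profile.
    """
    if not 0 <= read_pct <= 100:
        raise ValueError("read_pct must be between 0 and 100")
    reads = requests_per_sec * read_pct // 100
    writes = requests_per_sec - reads
    ops = ios_per_request(request_kb)
    return reads * ops, writes * ops


# Hypothetical 70/30 file-sharing workload of 2,000 requests per second.
print(iops_profile(2_000, 70, 64))    # (1400, 600)
print(iops_profile(2_000, 70, 1024))  # (5600, 2400)
```

The same request rate consumes four times the IOPS when each request is 1MB instead of 64KB, which is why request size belongs in the assessment alongside the R/W ratio.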

AWS provides a monitoring service, Amazon CloudWatch, to measure and trend performance statistics within the cloud. It is available for any of the AWS instance types and can collect and report bandwidth, throughput, latency, and average queue length information.

There are also a number of 3rd party tools available to measure R/W operations at the server level. Many storage sub-systems provide native dashboards and statistics to collect this information. SoftNAS® leverages an integrated and configurable Dashboard to provide these statistics at a glance.
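Once per-volume counters have been collected for a sampling period (for example, the sums of the EBS CloudWatch metrics VolumeReadOps, VolumeWriteOps, VolumeReadBytes and VolumeWriteBytes), the R/W ratio, IOPS and throughput profile can be derived as sketched below. This is an illustrative sketch: fetching the data is left out, and the sample values are hypothetical.

```python
from dataclasses import dataclass

KB = 1024
MB = 1024 * 1024


@dataclass
class VolumeSample:
    """Summed counters for one sampling period (values below are hypothetical)."""
    period_seconds: int
    read_ops: int
    write_ops: int
    read_bytes: int
    write_bytes: int


def profile(s: VolumeSample) -> dict:
    total_ops = s.read_ops + s.write_ops
    total_bytes = s.read_bytes + s.write_bytes
    return {
        # Percentage of operations that are reads (the "R" in the R/W ratio).
        "read_pct": 100 * s.read_ops / total_ops,
        # Average operations per second over the period.
        "avg_iops": total_ops / s.period_seconds,
        # Average throughput in MB/s (1MB = 1,024KB here).
        "throughput_mb_s": total_bytes / s.period_seconds / MB,
        # Average I/O size in KB.
        "avg_io_kb": total_bytes / total_ops / KB,
    }


# Hypothetical 5-minute sample: 600,000 reads and 150,000 writes of 32KB each.
sample = VolumeSample(period_seconds=300, read_ops=600_000, write_ops=150_000,
                      read_bytes=19_660_800_000, write_bytes=4_915_200_000)
print(profile(sample))
```

For this sample the result is an 80/20 R/W ratio, 2,500 average IOPS, about 78MB/s of throughput and a 32KB average I/O size.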

Throughput Requirements

Next, you will want to identify the data throughput requirements for the application workload.

SoftNAS® storage access performance is directly linked to the network performance of the AWS instance used for its deployment. An instance’s “Network Performance” rating indicates its relative data transfer rate. Network performance for non-10GbE instances is described as low, moderate, or high. These instances are multi-tenant and provide 1GbE network interfaces; the designation indicates the priority the instance has relative to other instances sharing the same infrastructure. This means that these instances are not guaranteed 1Gbps of dedicated bandwidth. Dedicated bandwidth requires either an instance with 10GbE network performance or a dedicated host.

By default, the instance’s network bandwidth is shared by SoftNAS® storage services (NFS, CIFS, AFP, iSCSI) and back-end storage traffic (EBS, S3). This means that the throughput estimate must account for R/W traffic to disk as well as file and block services traffic.

AWS offers a method to segment this traffic and provide dedicated bandwidth for front-end and back-end traffic through an option called EBS-Optimized. This option provides dedicated network bandwidth for EBS storage, which is separated from the instance’s allocated network bandwidth.
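A rough way to see why the shared link matters: when EBS traffic uses the same network interface, client data that must be read from or written to EBS crosses the instance's link twice. The sketch below is a simplified model, not a SoftNAS® sizing formula; the 100MB/s client figure and the `cache_hit_pct` parameter (traffic served from RAM without touching EBS) are hypothetical.

```python
def nic_load_gbps(client_mb_s: float, ebs_optimized: bool,
                  cache_hit_pct: float = 0.0) -> float:
    """Estimate the load on the instance's network link in Gbit/s.

    client_mb_s: front-end (NFS/CIFS/AFP/iSCSI) throughput in MB/s.
    cache_hit_pct: share of client traffic that never reaches EBS
    (a hypothetical simplification; 0 is the worst case).
    """
    front_end = client_mb_s
    back_end = client_mb_s * (1 - cache_hit_pct / 100)
    # With EBS-Optimized, EBS traffic moves to its own dedicated bandwidth.
    total_mb_s = front_end if ebs_optimized else front_end + back_end
    return total_mb_s * 8 / 1000  # MB/s -> Gbit/s, decimal units


print(nic_load_gbps(100, ebs_optimized=False))  # 1.6
print(nic_load_gbps(100, ebs_optimized=True))   # 0.8
```

In this worst case, 100MB/s of client traffic alone would exceed a 1GbE link without EBS-Optimized, but fits once the back-end traffic is moved off the shared interface.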

SoftNAS® Considerations

RAM for SoftNAS® Performance

SoftNAS® uses the Zettabyte File System (ZFS) as the underlying file system for our storage controller, which uses RAM extensively for metadata to track where data is stored.

ZFS uses ~50% of an instance’s RAM as Adaptive Replacement Cache (ARC) read cache. SoftNAS® ZFS uses a highly sophisticated caching algorithm that tries to cache both the most frequently used data and the most recently used data. SoftNAS® also includes advanced prefetching functionality that can greatly improve performance for various kinds of sequential reads. All of this functionality benefits from large RAM capacity.

A good objective is to identify the size of the most active dataset (the working set). This might be key files, records, or website content. By selecting an AWS instance whose ARC can hold this active dataset, the majority of reads to it will be served directly from RAM, which provides significant performance benefits.
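Because the ARC takes roughly half of RAM, the minimum instance memory for a given working set follows directly. This sketch treats the ~50% figure from the text as exact, which is a simplification; the 40GB working set and 64GB/122GB RAM sizes are hypothetical.

```python
import math

ARC_FRACTION = 0.5  # per the text: ZFS uses ~50% of RAM as ARC read cache


def min_ram_for_working_set(active_dataset_gb: float) -> int:
    """Smallest RAM size (GB) whose ARC share can hold the active dataset."""
    return math.ceil(active_dataset_gb / ARC_FRACTION)


def fits_in_arc(ram_gb: float, active_dataset_gb: float) -> bool:
    """True if the active dataset fits in the ARC portion of ram_gb."""
    return active_dataset_gb <= ram_gb * ARC_FRACTION


print(min_ram_for_working_set(40))  # 80
print(fits_in_arc(64, 40))          # False
print(fits_in_arc(122, 40))         # True
```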

If you plan to use deduplication, additional RAM is required. A good rule of thumb is to add 1GB of RAM for each 1TB of deduplicated data. ZFS allows deduplication to use up to half of the RAM that remains after the ARC read cache is allocated. This works out to approximately 25% of the instance’s total usable RAM being available for deduplication when the feature is enabled, which is helpful when calculating the ratio of RAM to deduplicated data.
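The two rules of thumb above can be combined into a quick check, sketched below under the assumption that both figures (1GB per TB, ~25% of RAM) hold exactly. The 10TB and 64GB inputs are hypothetical.

```python
DEDUP_RAM_GB_PER_TB = 1.0  # rule of thumb from the text: 1GB RAM per 1TB deduplicated
DEDUP_RAM_FRACTION = 0.25  # ~half of the RAM left after the ~50% ARC share


def extra_ram_for_dedup_gb(dedup_tb: float) -> float:
    """Additional RAM (GB) to plan for a given amount of deduplicated data."""
    return dedup_tb * DEDUP_RAM_GB_PER_TB


def max_dedup_tb(ram_gb: float) -> float:
    """Deduplicated data (TB) supportable by the RAM ZFS allows for deduplication."""
    return ram_gb * DEDUP_RAM_FRACTION / DEDUP_RAM_GB_PER_TB


print(extra_ram_for_dedup_gb(10))  # 10.0
print(max_dedup_tb(64))            # 16.0
```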

SoftNAS® ZFS Overhead – Storage Capacity Considerations

SoftNAS® uses the RAID options available through ZFS, which is the underlying file system for the storage controller.

Once EBS volumes are attached to the SoftNAS® instance, they are partitioned and added to a storage pool (zpool) for provisioning to NAS volumes that can be accessed over the NFS, CIFS, AFP, and iSCSI protocols. There is approximately 10% ZFS overhead when creating zpools, which must be considered when calculating the raw EBS volume size versus the usable zpool capacity available for data storage.

When planning capacity, allow for storage overhead beyond the dataset itself. ZFS is a copy-on-write file system, which means that it writes new data into free blocks rather than overwriting existing ones. Best practice is to maintain approximately 20% free space in the zpool; once the dataset reaches 80% capacity, it’s time to add more EBS volumes.
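The ~10% zpool overhead and the 80% fill threshold can be folded into one capacity calculation. This is a minimal sketch that treats both percentages as exact and measures the 80% threshold against usable zpool capacity; the 10TB and 9TB figures are hypothetical.

```python
ZFS_OVERHEAD = 0.10  # ~10% zpool overhead (from the text)
MAX_FILL = 0.80      # keep ~20% free; add EBS volumes at 80% full (from the text)


def usable_capacity_tb(raw_ebs_tb: float) -> float:
    """Usable zpool capacity left after ZFS overhead."""
    return raw_ebs_tb * (1 - ZFS_OVERHEAD)


def raw_ebs_needed_tb(dataset_tb: float) -> float:
    """Raw EBS capacity so that the dataset fills at most 80% of the zpool."""
    return dataset_tb / MAX_FILL / (1 - ZFS_OVERHEAD)


def needs_expansion(used_tb: float, raw_ebs_tb: float) -> bool:
    """True once the data reaches the 80% threshold of usable capacity."""
    return used_tb >= usable_capacity_tb(raw_ebs_tb) * MAX_FILL


print(round(usable_capacity_tb(10), 2))  # 9.0
print(round(raw_ebs_needed_tb(9), 2))    # 12.5
print(needs_expansion(7.5, 10))          # True
```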

RAID Considerations

EBS volumes are RAID protected by AWS. These volumes are resilient, and SoftNAS® does not recommend adding additional RAID redundancy unless the application requires a higher level of data protection than what EBS already provides. Our standard recommendation is to use RAID 0 based on the performance benefits outlined below.

EBS performance limits (IOPS and throughput) apply to each individual EBS volume. These performance characteristics can be “stacked” by leveraging RAID through the SoftNAS® storage controller: using RAID 0 striping, SoftNAS® can aggregate multiple EBS volumes to support a much higher IOPS and throughput profile.

EXAMPLE

- Five 3.334TB General Purpose EBS volumes

- Each with an IOPS profile of 10,000

- Each with a throughput of 160MBps

These can be striped together with RAID 0 to support a total of 50,000 IOPS, 800MBps, and a raw capacity of ~16.67TB.

This principle applies to each of the EBS types.
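The example above can be reproduced with a small aggregation helper. This is an idealized sketch: it assumes I/O is spread evenly across the stripe members and ignores the instance's own network and EBS bandwidth limits, which can cap the real total. The `EbsVolume` type is illustrative only.

```python
from dataclasses import dataclass


@dataclass
class EbsVolume:
    size_tb: float
    iops: int
    throughput_mb_s: int


def raid0_totals(volumes: list[EbsVolume]) -> tuple[float, int, int]:
    """Aggregate raw capacity (TB), IOPS and throughput (MBps) of a RAID 0 stripe.

    Idealized: assumes even I/O distribution and no instance-side bottleneck.
    """
    return (sum(v.size_tb for v in volumes),
            sum(v.iops for v in volumes),
            sum(v.throughput_mb_s for v in volumes))


# The five-volume General Purpose example from the text.
stripe = [EbsVolume(3.334, 10_000, 160) for _ in range(5)]
capacity, iops, throughput = raid0_totals(stripe)
print(f"{capacity:.2f}TB, {iops} IOPS, {throughput}MBps")  # 16.67TB, 50000 IOPS, 800MBps
```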

AWS Performance Features and EBS Volumes Characteristics

EBS-Optimized Instances

There are many different AWS instance types to select from when deploying SoftNAS®. We support both HVM and PV instances, which allows flexibility when choosing the correct combination of vCPUs, memory, networking, and storage capacity. As mentioned above, a key consideration when selecting the SoftNAS® instance type is the EBS-Optimized option.

“An Amazon EBS–optimized instance uses an optimized configuration stack and provides additional, dedicated capacity for Amazon EBS I/O. This optimization provides the best performance for the EBS volumes by minimizing contention between Amazon EBS I/O and other traffic from the instance. EBS-Optimized instances deliver dedicated throughput to Amazon EBS, with options between 500 Mbps and 4,000 Mbps, depending on the instance type you use.” – AWS

By separating SoftNAS® data services traffic from back-end storage traffic, we are able to provide excellent overall performance and, in turn, improve application response time and user experience. We highly recommend selecting an instance that supports the EBS-Optimized feature.
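Note that the EBS-Optimized figures quoted above are in megabits per second, while EBS volume throughput is given in megabytes per second. The sketch below converts between them and takes the lower of the two as the effective storage throughput. It is a simplified bottleneck model; the 800MBps stripe is the RAID 0 example from earlier in this article, used here as a hypothetical input.

```python
def mbps_to_mb_s(mbps: float) -> float:
    """Convert megabits per second (EBS-Optimized figures) to megabytes per second."""
    return mbps / 8


def effective_storage_throughput(stripe_mb_s: float, ebs_optimized_mbps: float) -> float:
    """Throughput the instance can draw from EBS: the lower of the volumes'
    aggregate throughput and the instance's dedicated EBS bandwidth."""
    return min(stripe_mb_s, mbps_to_mb_s(ebs_optimized_mbps))


print(mbps_to_mb_s(500))                         # 62.5
print(mbps_to_mb_s(4_000))                       # 500.0
print(effective_storage_throughput(800, 4_000))  # 500.0
```

Under this model, even the top 4,000 Mbps option delivers about 500MB/s, so an 800MBps stripe would be limited by the instance rather than by the volumes.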

Enhanced Networking

SoftNAS® supports the Enhanced Networking feature offered by AWS.

“This feature provides higher performance (packets per second), lower latency, and lower jitter.” – AWS

When deploying SoftNAS® within the AWS environment, check whether the instance type you are considering supports Enhanced Networking so you can take advantage of this feature. Note that Enhanced Networking requires an HVM AMI; it is not available for PV instances.

EBS I/O Characteristics

While SSD-backed EBS volumes provide consistent performance for reads and writes and for random and sequential operations, I/O characteristics can still have an impact.

“On a given volume configuration, certain I/O characteristics drive the performance behavior on the back-end. General Purpose (SSD) and Provisioned IOPS (SSD) volumes deliver consistent performance whether an I/O operation is random or sequential, and also whether an I/O operation is to read or write data. I/O size, however, does make an impact on IOPS because of the way they are measured.” – AWS
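One way to see how I/O size affects a volume is to compute which limit is reached first: the IOPS budget (with requests over 256KB counted as several operations) or the volume's throughput cap. The sketch below is a simplified model assuming a fixed I/O size; the 10,000 IOPS and 160MBps volume is a hypothetical General Purpose volume, and 1MB is taken as 1,024KB.

```python
import math

MAX_IO_KB = 256  # per the text: larger I/O is counted as multiple operations


def achievable_requests(io_kb: int, volume_iops: int, volume_mb_s: float) -> int:
    """Requests per second a volume can serve for a fixed I/O size.

    Bounded by whichever runs out first: the IOPS budget or the throughput cap.
    """
    ops_per_request = math.ceil(io_kb / MAX_IO_KB)
    iops_limit = volume_iops // ops_per_request
    throughput_limit = int(volume_mb_s * 1024 // io_kb)
    return min(iops_limit, throughput_limit)


# Hypothetical General Purpose volume: 10,000 IOPS, 160MBps.
for size in (4, 16, 64, 256, 1024):
    print(f"{size}KB I/O: {achievable_requests(size, 10_000, 160)} requests/s")
```

With these inputs, 4KB and 16KB requests are IOPS-bound at 10,000 per second, while 64KB requests are throughput-bound at 2,560 per second and 256KB requests at 640.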

General Purpose EBS (SSD)

General Purpose EBS disks are RAID protected and function as the storage workhorse for many applications within the AWS environment. Each EBS volume is tied to a single Availability Zone within a Region and can only be attached to an instance in that same Availability Zone, so the volumes must be created in the same Availability Zone as the SoftNAS® instance.

Typical use cases for General Purpose EBS storage include:

- System Boot volumes

- Virtual Desktops

- Small to Medium sized databases

- Development and test environments

Volume Capacity Range: 1GB – 16TB

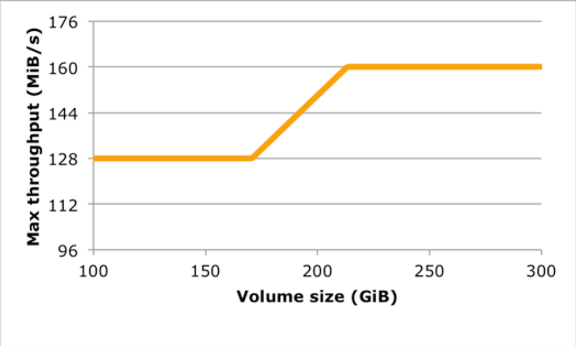

Volume Maximum Throughput: 160MBps

- 128MBps throughput on volumes under 170GB

- 768KBps per GB throughput increase from 170GB to 214GB

- 160MBps throughput on volumes 214GB and larger
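The throughput figures above can be expressed as a function of volume size, taking 768KBps as 0.75MBps. The IOPS constants in this sketch are not stated in the text; they are an assumption based on AWS's General Purpose (gp2) model at the time this article was written (3 IOPS per GB, minimum 100, maximum 10,000), which is consistent with the 10,000 IOPS per 3.334TB volume in the RAID example. AWS has since changed these limits, so check current documentation before relying on them.

```python
GP2_IOPS_PER_GB = 3    # assumption: gp2 baseline at the time of writing
GP2_MIN_IOPS = 100     # assumption
GP2_MAX_IOPS = 10_000  # assumption; matches the 3.334TB / 10,000 IOPS example


def gp2_baseline_iops(size_gb: int) -> int:
    """Baseline IOPS for a General Purpose volume (assumed constants above)."""
    return max(GP2_MIN_IOPS, min(GP2_MAX_IOPS, GP2_IOPS_PER_GB * size_gb))


def gp2_throughput_mb_s(size_gb: float) -> float:
    """Maximum throughput per the figures above: 128MBps below 170GB, then
    +0.75MBps (768KBps) per GB, capped at 160MBps from 214GB."""
    if size_gb < 170:
        return 128.0
    return min(160.0, 128.0 + 0.75 * (size_gb - 170))


for size in (100, 180, 214, 3_334):
    print(size, gp2_baseline_iops(size), gp2_throughput_mb_s(size))
```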

Provisioned IOPS EBS (SSD)

These disks are also RAID protected and designed for I/O intensive workloads, specifically applications that are sensitive to storage performance.

Typical use cases for Provisioned IOPS EBS storage include:

- Critical business applications that require sustained IOPS performance, or more than 10,000 IOPS or 160MBps of throughput per volume

- Applications requiring higher performance and smaller capacity (write log or read cache)

- Large database workloads such as:

  - MongoDB

  - Microsoft SQL Server

  - MySQL

  - PostgreSQL

  - Oracle

Volume Capacity Range: 4GB – 16TB

Volume Maximum Throughput: 320MBps

- Actual throughput depends on the I/O (block) size and the provisioned IOPS.

Volume IOPS: Up to 20,000 IOPS

- The maximum IOPS-to-capacity ratio is 30:1 (30 IOPS per GB)

- 3,000 IOPS requires at least a 100GB volume

- 20,000 IOPS requires at least a 667GB volume

Latency Recommendation:

“The per I/O latency experience depends on the IOPS provisioned and the workload pattern. For the best per I/O latency experience, we recommend you provision an IOPS to GB ratio greater than a 2:1 (for example, a 2,000 IOPS volume would be smaller than 1,000 GiB in this case).” – AWS
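Together, the 30:1 maximum ratio and the latency guidance bound the volume size for a given IOPS target: the volume must be large enough for the provisioned IOPS, but small enough to stay above 2 IOPS per GB. The sketch below applies only the limits stated in this section; the function name is illustrative.

```python
import math

IO1_MAX_IOPS_PER_GB = 30  # maximum IOPS-to-capacity ratio (from the text)
IO1_MIN_GB = 4            # smallest Provisioned IOPS volume (from the text)
IO1_MAX_IOPS = 20_000     # per-volume IOPS ceiling (from the text)
LATENCY_IOPS_PER_GB = 2   # AWS latency guidance: more than 2 IOPS per GB


def io1_size_range(iops: int) -> tuple[int, int]:
    """Return (smallest allowed size, largest size meeting the latency guidance) in GB."""
    if not 0 < iops <= IO1_MAX_IOPS:
        raise ValueError("IOPS must be between 1 and 20,000")
    min_gb = max(IO1_MIN_GB, math.ceil(iops / IO1_MAX_IOPS_PER_GB))
    # "Greater than 2:1" means the size must be strictly below iops / 2 GB.
    max_gb = math.ceil(iops / LATENCY_IOPS_PER_GB) - 1
    if min_gb > max_gb:
        raise ValueError("no volume size satisfies both limits")
    return min_gb, max_gb


print(io1_size_range(3_000))   # (100, 1499)
print(io1_size_range(20_000))  # (667, 9999)
print(io1_size_range(2_000))   # (67, 999)
```

The 2,000 IOPS case matches the AWS example: the volume should stay smaller than 1,000GB.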

Magnetic EBS (Spinning Disk)

These disks are RAID protected and are the lowest cost per GB EBS option.

Rather than SSD, the storage is backed by magnetic drives. Based on the media and performance characteristics, this storage is ideal for sequential reads where lower cost is important and lower performance is acceptable.

Typical use cases for Magnetic EBS storage include:

- Cold workloads where data is infrequently accessed

- Scenarios where the lowest storage cost is important

Volume Capacity Range: 1GB – 1TB

Volume Throughput Range: 40 – 90MBps

Volume IOPS:

- Averages 100 IOPS

- Bursts to hundreds of IOPS